The moment you make your first AI music track can feel quite magical, especially if you are as profoundly unmusical as me.

I cannot hold a note or even reliably maintain a beat, yet in 30 seconds I can now make an entire pop song, lyrics and all.

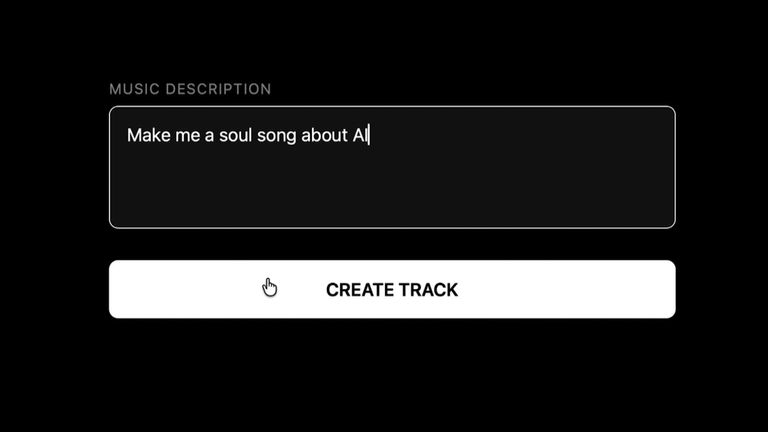

Or a soul song. Or a metal track. Whatever comes to mind – you just tell the AI engine what you want and it does the rest. The output might be a little generic, but as far as listeners are concerned it’s indistinguishable from the real thing.

Even people far more musically aware than me cannot tell the difference between human and artificial songs. The musical Turing Test has been well and truly passed.

AI music is a perfect example of the power of artificial intelligence to take complicated tasks and automate them to a standard that was unimaginable only a few years ago.

Yet, as ever with technology, removing friction comes with a cost.

I have spent the last few months investigating AI music. What has emerged is a picture of a vast attempted fraud, as technologically equipped criminals use AI tools to try to take billions of pounds away from real-life musicians.

The fraud takes place in two stages which sound like something from a science-fiction novel, but are now part of everyday life in the hidden world of the internet economy.

First, the fraudsters make huge amounts of AI music. Then, they build bots to stream that music over and over again and thereby make some royalties.

Yes, this is a case of robots listening to robot music. The defence? More robots. Let me explain.

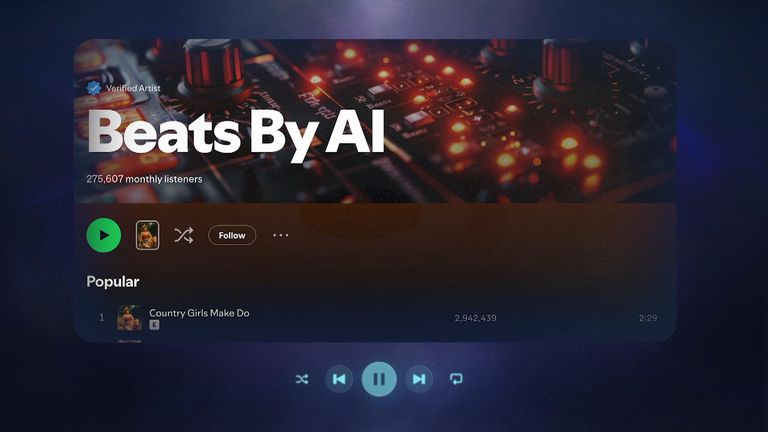

Thanks to the ease with which AI music can be made, production of it is already reaching an industrial scale.

The best figures we have on the number of AI tracks being released come from Deezer, a streaming site which is the French equivalent of Spotify or Apple Music. It estimates that 60,000 fully AI tracks are being uploaded to its site every day, over a third of all production.

To put that into context: in 2015 the entire US music industry made around 57,000 songs.

A decade later, Deezer is set to receive 21 million AI tracks a year – and this is a conservative estimate, because the scale of AI music production is growing by the month.
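
As a rough check on those figures, the arithmetic can be sketched in a few lines of Python. The constant names are illustrative, and the only inputs are the numbers quoted above. A flat upload rate over a 365-day year is an assumption, which is why the result lands slightly above the rounded 21 million.

```python
# Figures quoted in the article; names are illustrative only.
AI_TRACKS_PER_DAY = 60_000   # Deezer's estimate of fully AI uploads per day
US_SONGS_2015 = 57_000       # songs the article attributes to the US industry in 2015
DAYS_PER_YEAR = 365          # assumption: constant upload rate all year


def yearly_uploads(per_day: int, days: int = DAYS_PER_YEAR) -> int:
    """Scale a daily upload count to a yearly total."""
    return per_day * days


ai_per_year = yearly_uploads(AI_TRACKS_PER_DAY)
ratio_to_2015 = ai_per_year / US_SONGS_2015

print(f"AI tracks per year: {ai_per_year:,}")                 # 21,900,000
print(f"Multiple of the 2015 US figure: {ratio_to_2015:.0f}")  # 384
```

On these assumptions, a single platform would take in several hundred times the 2015 US figure in AI tracks alone.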

“It’s a way to totally flood the music streaming services,” says Romain Hennequin, head of research at Deezer, who has developed an algorithm to detect AI music being uploaded to the platform, picking up tiny features of the music which are inaudible to the human ear.

The tracks themselves are not actually fraudulent, but the behaviour around them is.

Someone will upload an AI track, then use an automated system – a bot – to listen to it again and again in order to make royalties from it.

This isn’t a minor aspect of AI music perpetrated by a few bad apples.

According to Thibault Roucou, Deezer’s head of royalties, “the vast majority of the listeners of this content is in fact what we call stream manipulation or fraud”.

His algorithms, which pick up unnatural activity on the platform in the same way that a bank looks for unusual activity around payments, suggest that as many as 85% of all listens to fully AI music are fraudulent.

This is not just an issue for Deezer, but also for artists, because of the way that payments work on streaming services.

There is no set price for a single stream; instead, artists get paid from a common royalty pool depending on the proportion of streams they get.

This means that if someone generates a huge number of streams, they will take money away from everyone else, by shrinking everyone else’s share of the common pool.
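
To see how a shared pool dilutes payouts, here is a minimal sketch of a pro-rata split. The pool size, account names and stream counts are all hypothetical, and real payout rules are likely more involved than this.

```python
def pro_rata_payouts(pool: float, streams: dict[str, int]) -> dict[str, float]:
    """Split a fixed royalty pool in proportion to each account's streams."""
    total = sum(streams.values())
    if total == 0:
        # No streams at all: nobody is paid anything.
        return {name: 0.0 for name in streams}
    return {name: pool * count / total for name, count in streams.items()}


POOL = 1000.0  # hypothetical pool, in arbitrary currency units

# Hypothetical month with only human artists on the platform.
honest = {"artist_a": 600, "artist_b": 400}
before = pro_rata_payouts(POOL, honest)

# Same month, plus an account whose 250 streams come from bots.
with_bot = dict(honest, bot_uploader=250)
after = pro_rata_payouts(POOL, with_bot)

for name in honest:
    print(f"{name}: {before[name]:.2f} -> {after[name]:.2f}")
print(f"bot_uploader: {after['bot_uploader']:.2f}")
```

In this toy example the pool stays at 1,000, yet the two human artists drop from 600 and 400 to 480 and 320, with the remaining 200 going to the bot-driven account.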

The sums involved are extremely large. “We detect 8 to 9% of the stream as being fraudulent,” says Alexis Lanternier, CEO of Deezer.

“If you implement this 8% to the world of music, it’s roughly a few billion dollars, two to three.”

‘An ongoing battle’

Deezer says it is identifying the bots using its own automated systems, in order to prevent the tracks the bots stream from generating royalties, but the fraudsters are always looking for new ways to get past the defences.

“It’s an ongoing battle,” says head of royalties Roucou.

“I think we will not lose, but we will not win anyway because they will continue to improve, and we will also. And we hope we will be able… to prevent them from taking too much money from other artists.”

When I spoke to human artists about the situation, they were shocked.

“As artists, we get such a small fraction of the money that we actually deserve to get because of the streaming system. And for that to just be getting cut shorter and shorter and shorter through robots… it makes my blood boil,” says folk musician Lila Tristram.

“The music industry needs to get their hands around this a little bit, otherwise it could rapidly get quite out of control,” says Aidan Grant, founder of music production agency Different Sauce.

But what can be done? The genie of AI music is out of the bottle now, so currently the debate is on the best way to alert consumers and musicians.

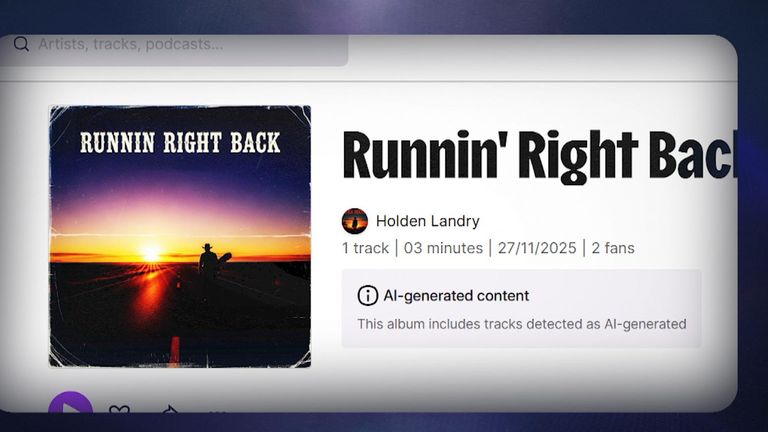

Deezer has decided to label fully AI tracks as AI, but right now it is the only streaming site to take this approach.

Spotify, the world’s biggest streaming service, has opted against it, for fear it might stigmatise musicians who use AI, a potential problem in a future in which every track is made using some kind of artificial assistance.

Neither YouTube nor Apple Music labels AI tracks. YouTube said it asks creators to mark AI content as AI if it looks realistic. Apple didn’t respond to a request for comment.

Where Spotify has joined Deezer is in trying to block the flood of AI music and fraudulent streams.

Last year, it removed 75 million spam tracks, many of which will have been AI. For context, Spotify’s whole catalogue stands at 100 million tracks.

The one silver lining for musicians? Although some AI tracks have gathered a lot of hype and generated millions of streams, so far there is no great appetite for AI music outside the occasional one-off viral hit – and of course the fraudsters.

It seems that music still needs to be played and promoted by someone with genuine personal appeal for it to find a meaningful audience. The human connection matters… for the moment anyway.